Vansh Tibrewal

vtibrewa [at] caltech [dot] edu

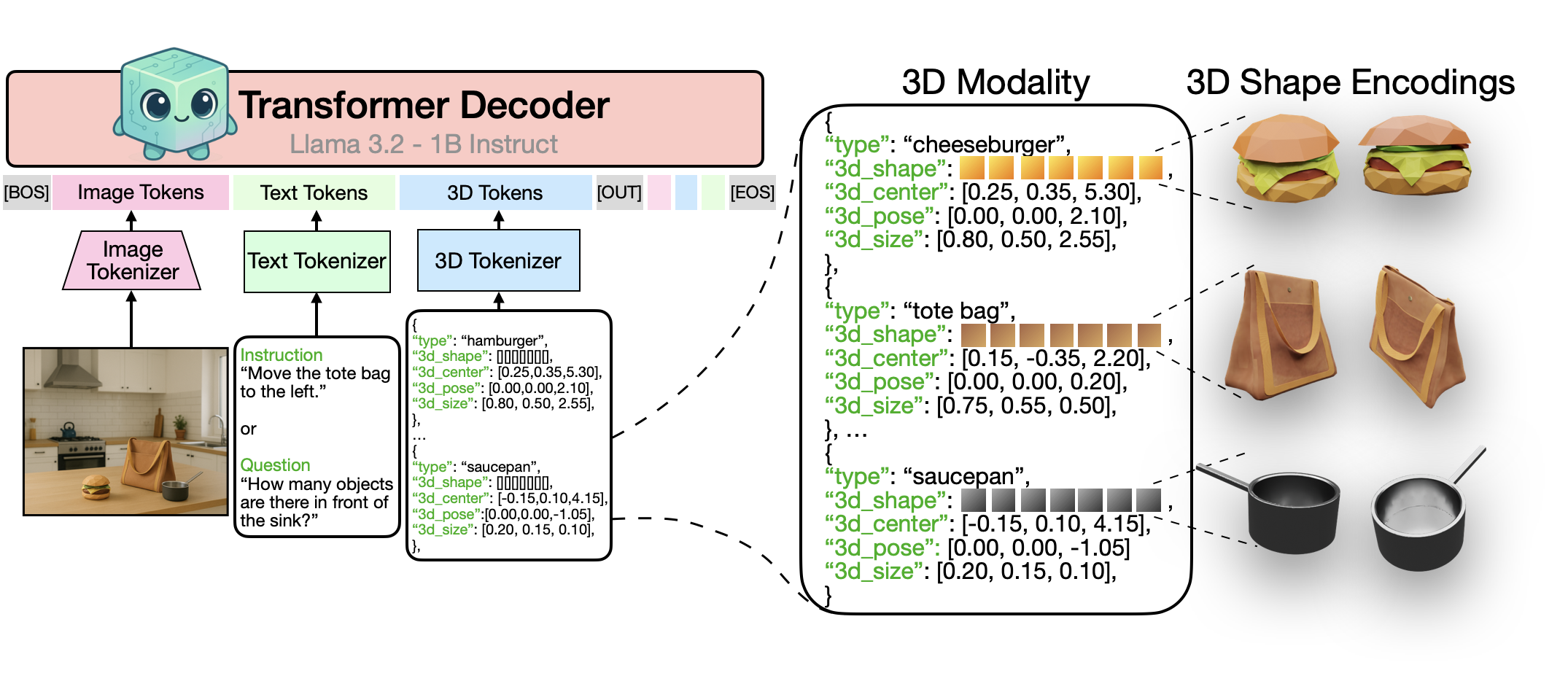

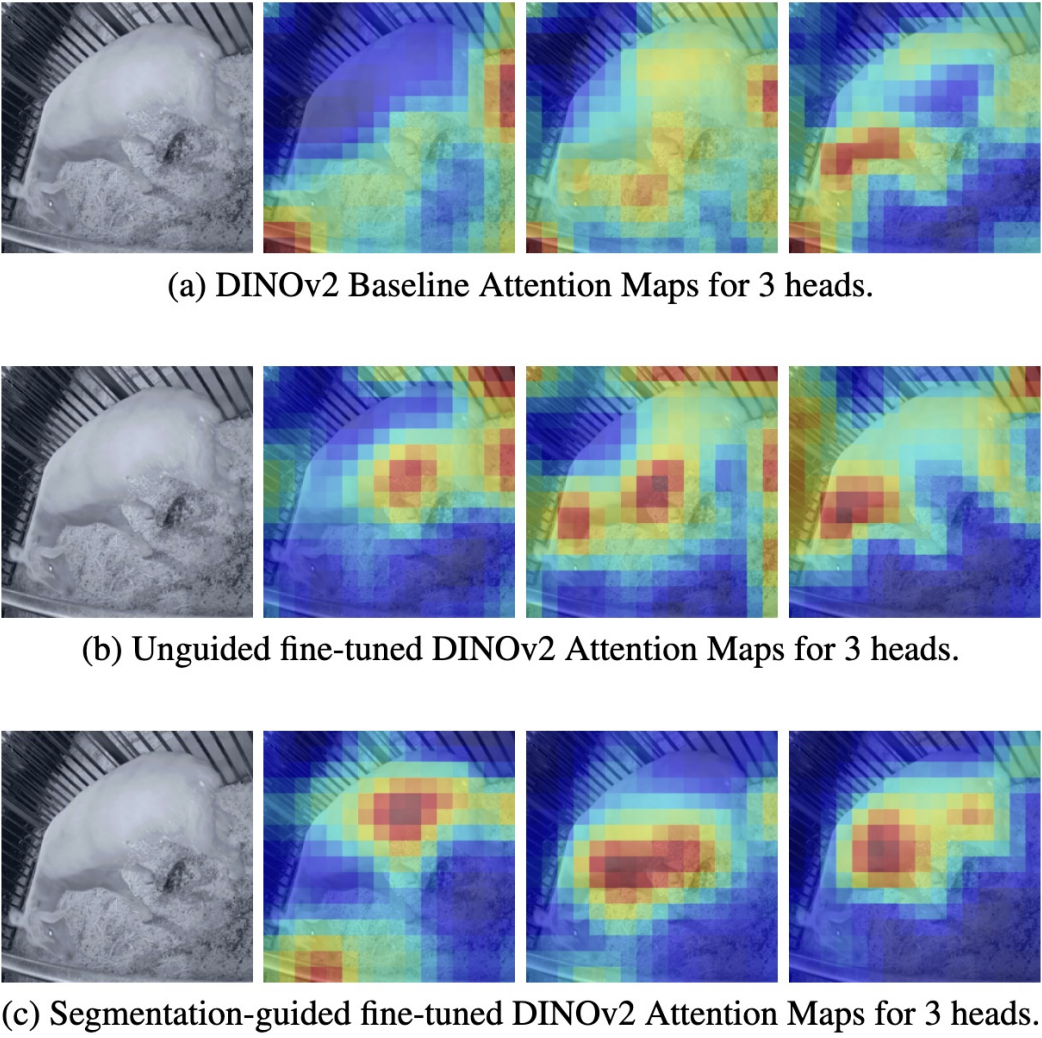

I’m a senior at Caltech majoring in Computer Science. Currently, I am a member of Professor Georgia Gkioxari’s lab at Caltech. Previously, I worked in Professor Pietro Perona’s Vision Lab and before that in Professor Anima Anandkumar’s AI + Science Lab.

Last summer, I was a Research Engineer Intern at Citadel working on the Core Data team.

My interests lie at the intersection of vision, multimodality, and representation learning. My primary research goal is to build machines with robust multimodal representations of the world, so that they can model it, interact with it, and learn from it.

Publications

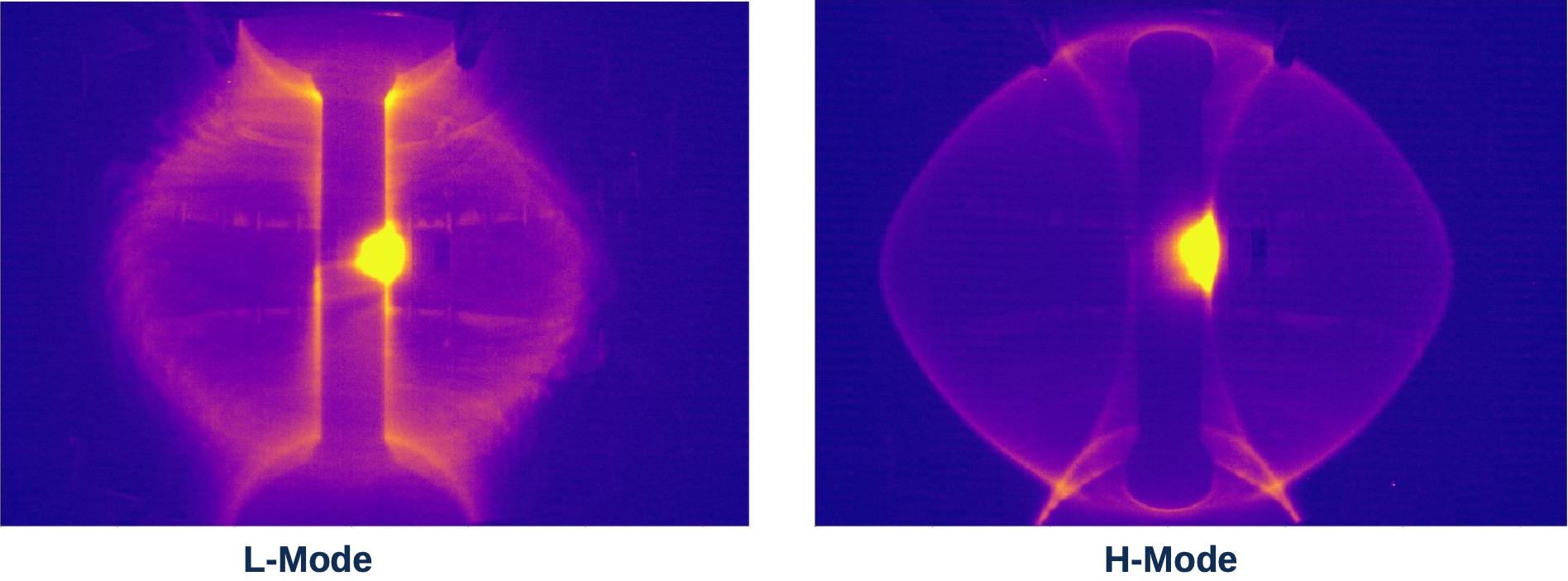

Using Machine Learning over Fast Camera Images to Classify the Plasma Confinement Mode within the MAST Experiment

49th EPS Conference on Plasma Physics, 2023
